Lead Product Designer

Agents spent time navigating across tabs to find answers, increasing resolution time.

Introduced an inline, AI-generated answer experience within search results: contextual, permission-aware, and scalable.

Reduced average time-to-resolution, improved agent satisfaction, and accelerated Einstein Search adoption across enterprise teams.

Service agents waste time navigating through long article lists or fragmented knowledge sources to find relevant answers. Keyword-based search often returns irrelevant or insufficient results, reducing confidence and speed. The existing Einstein Search system provides results based on relevance, but article titles and snippets often fail to convey whether an article truly solves the case. This results in inefficiencies and erodes agent trust in AI-powered assistance.

Einstein Search envisions delivering an intelligent, personalized search experience that helps all Salesforce users, across roles and personas, quickly find the right information without friction. Its goal is to make search natural and context-aware, enabling employees throughout the organization to work smarter and faster.

Within this vision, we focused primarily on the Case Solver persona: service agents who resolve customer issues daily and rely heavily on search to find relevant answers. The Generative Search Answers feature extracts and synthesizes information from multiple sources, including knowledge articles and structured CRM data, to provide AI-generated summaries that surface directly within their workflow.

Our business goal was to accelerate case resolution, improve agent productivity, and enhance customer satisfaction by embedding these trusted AI-powered answers seamlessly into search experiences across channels like the Case Console, Experience Cloud, and collaboration platforms.

Our UX strategy closely aligned with the overarching product vision of delivering accurate, AI-powered answers across Salesforce, with a clear initial focus on the Case Solver persona. While the PM’s initial scope targeted providing relevant answers directly in search results as a foundational use case, I envisioned the feature’s potential to extend far beyond that, enabling AI-powered, context-aware information delivery across multiple user scenarios and channels.

From day one, we aimed to test the waters with a focused, incremental approach, validating what we could achieve technically and from a UX perspective before broadening the feature’s reach. In collaboration with the PM and other stakeholders, I initiated discussions around diverse real-world use cases where surfacing the right information upfront, without forcing users to sift through, compare, or validate multiple sources, could significantly improve daily workflows.

This approach balanced immediate product goals with a long-term vision to create a scalable, trustworthy AI search assistant that serves not only service agents but any employee seeking fast, accurate, and relevant answers.

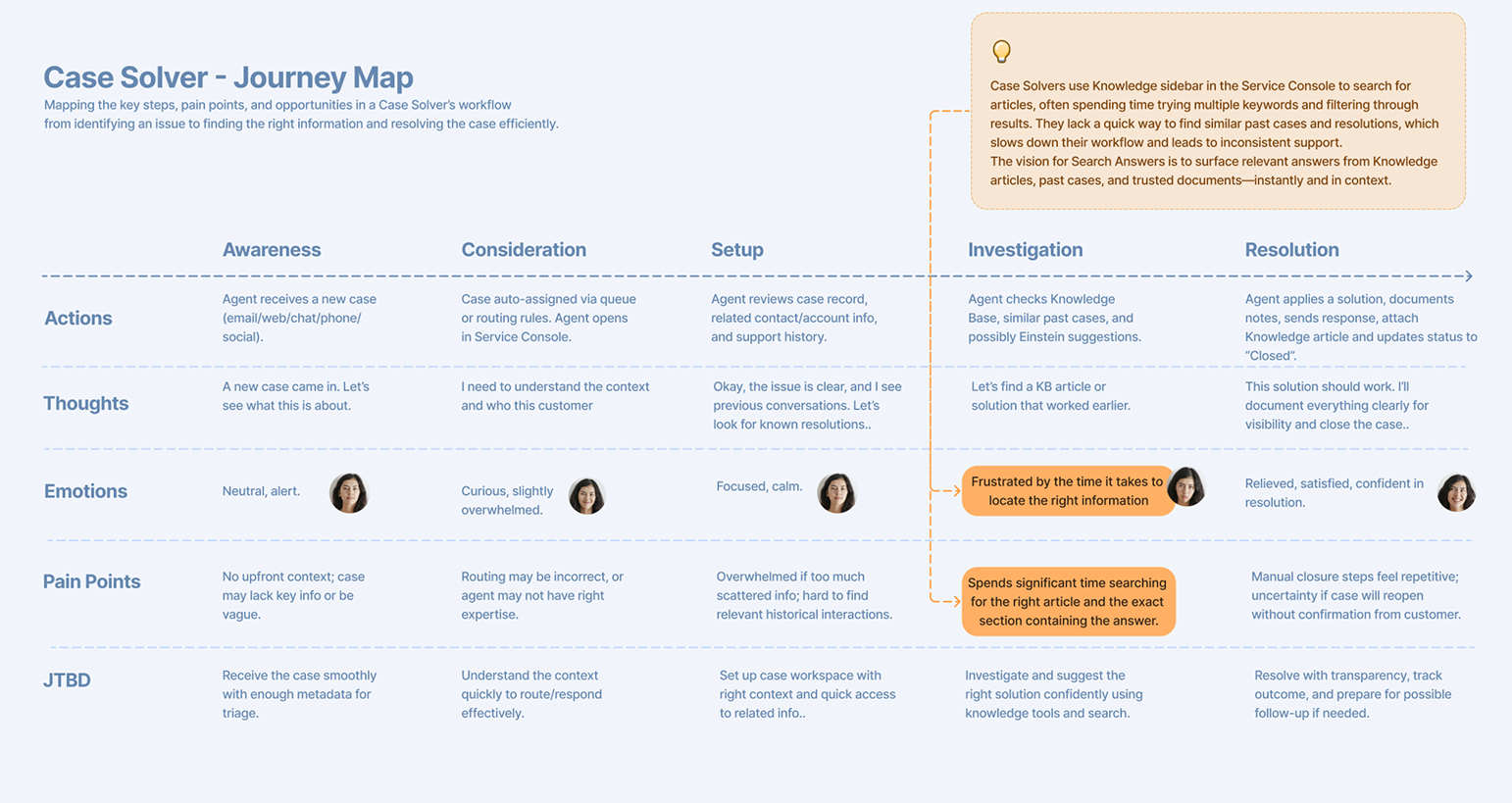

We began by focusing on the Case Solver persona, as service agents play a critical role in Salesforce’s Service Cloud ecosystem and represent a key CRM user group. To deeply understand their daily workflows, I researched and mapped the typical case resolution journey, which involves several stages:

I conducted interviews with internal case solvers to understand how they interact with Einstein Search during these steps, particularly how they search for knowledge articles to resolve cases. The existing keyword-based search often returned long lists of articles where titles and snippets did not always convey the right solution, causing friction and delays. Mapping this journey helped identify key pain points and opportunities where generative AI could deliver concise, trustworthy answers, minimizing search effort and accelerating case resolution.

Before diving into solution design, I focused on deeply understanding the problem from the perspective of service agents. I immersed myself in their journey, observing how they used Einstein Search to find knowledge articles and solve cases, and mapping out each step to reveal pain points around discovery friction, irrelevant results, and trust in AI outputs.

Working closely with the PM, I synthesized qualitative insights from interviews and user observations into clear problem statements and “How Might We” questions that framed our design focus. This included identifying where agents struggled to find precise answers quickly and where AI could add real value.

I also helped create visual journey maps and frameworks that articulated these challenges, aligning cross-functional teams (product, research, engineering) around a shared vocabulary and understanding. This foundation ensured that design efforts would be targeted and strategic.

Key user goals emerged:

The search experience in Salesforce isn’t generic; it must be tightly integrated with workflows. Our approach demanded a native, trusted, and frictionless experience that respected how agents worked while enhancing it with intelligent suggestions.

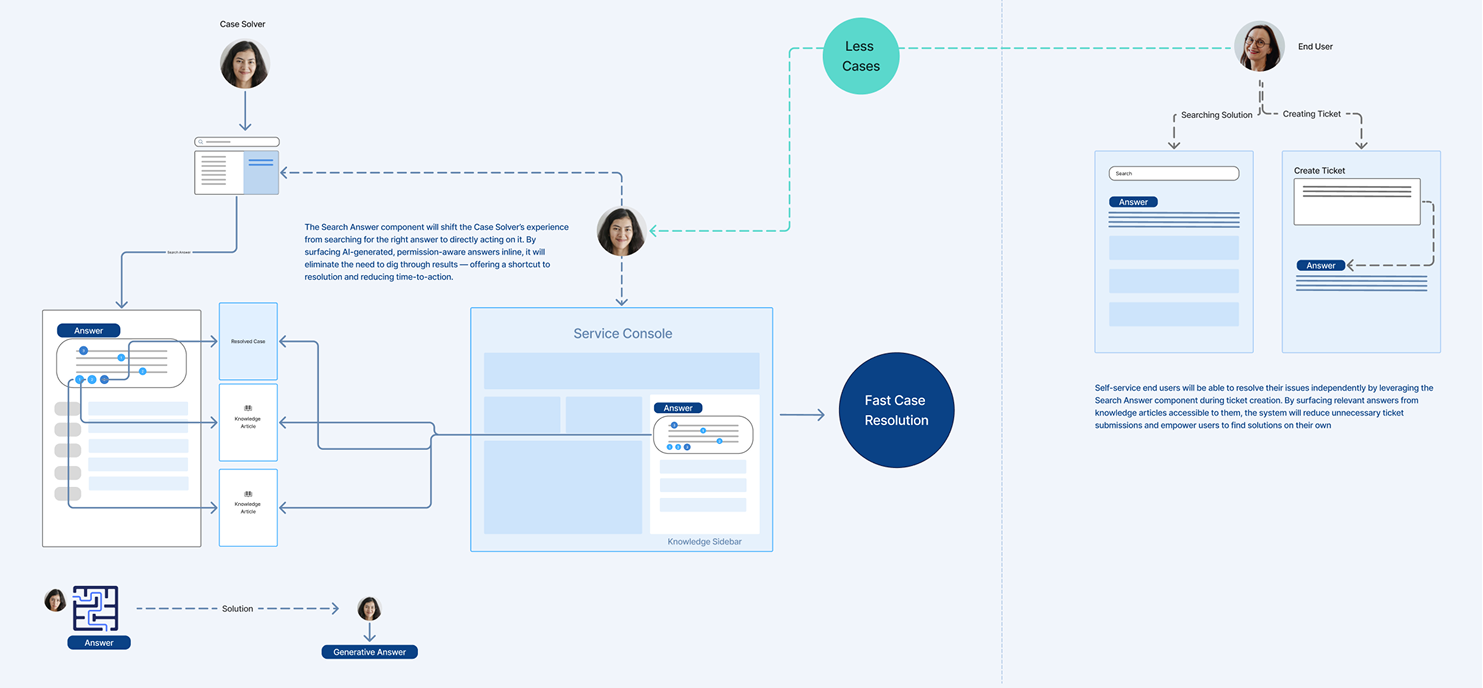

During this phase, another insight emerged: while we initially focused on improving the case investigation step for agents, similar friction existed for end customers trying to solve issues on self-service portals. To address this, I expanded the problem definition to consider opportunities for early resolution before a case is even logged. This included experimenting with ways to integrate AI-generated answers directly into customer-facing search and ticket submission flows, potentially deflecting cases altogether.

By the end of Define, we had a clear and expanded problem statement, aligned stakeholders, and a refined strategy that included internal and external user touchpoints.

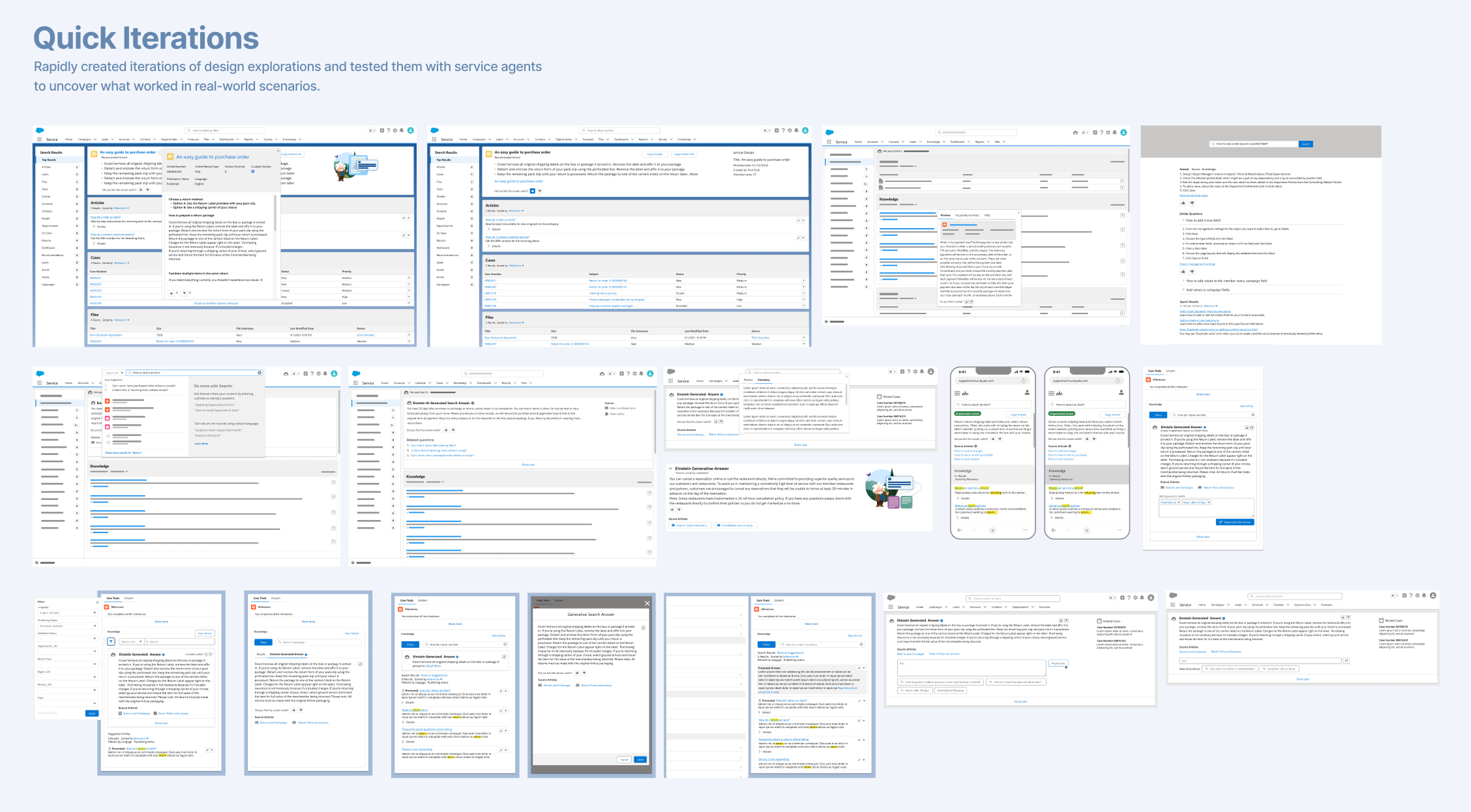

In the ideation phase, I collaborated closely with the PM, a researcher from Usability Sciences, and internal stakeholders to generate and evaluate multiple design directions. We rapidly explored concepts to understand how generative answers could best serve service agents in real-world workflows.

Early usability sessions with agents revealed a key insight: even when the generated answers were accurate, they were often skipped in favor of traditional knowledge article results. The placement and visual styling of the generative response made it feel like a banner—something promotional or secondary—reducing its perceived authority.

Through multiple rounds of feedback and design sketching, we uncovered important behavior patterns:

These insights guided our decision-making and shaped the direction of what we’d refine in the design phase.

We also began to envision broader use cases beyond agent-facing search. With the goal of reducing friction across the support journey, I proposed early concepts for extending generative answers into:

These ideas were sketched and tested at a low-fidelity level with partner customers, who responded positively—seeing potential to improve resolution rates and reduce support volume.

Transform validated insights into a seamless, trustworthy UI that enhances case investigation through AI-powered answers. Building on research and iterative ideation, I translated learnings into high-fidelity mockups and interactive prototypes. The core design challenge was to ensure that the AI-generated answer felt like the most relevant and trustworthy reply, seamlessly integrated into the agent's flow.

Key design actions:

These decisions helped shift user behavior—agents became more likely to pause, read, and use the AI-generated answers rather than defaulting to traditional search results. I collaborated closely with engineering to ensure designs were feasible and scalable, especially as we prepared for GA release. Prototypes were also used in stakeholder demos and internal buy-in sessions to communicate the vision and drive alignment.

As we improved the core experience, our product vision evolved toward a future where the right information is accessible across all channels and formats, leveraging the power of the Search Answer engine beyond traditional search interfaces.

I envisioned the search engine acting as an active participant in collaborative environments (for example, integrated into Slack channels) where users can ask questions in natural language and receive AI-generated answers in real time based on the ongoing conversation.

Understanding that users seek answers not only through text queries but also via images and multimedia, I explored design concepts enabling users to upload images to support their questions, for instance, uploading a photo of a printer model to get the correct driver information.

Further, recognizing Salesforce’s existing capabilities to transcribe customer meeting videos into text, I proposed combining these features with Generative Search Answers. This integration could enable extracting answers directly from video content, significantly broadening the scope of AI-powered knowledge retrieval with minimal additional development effort.

This vision positions Generative Search Answers as a versatile, omni-channel AI assistant embedded in everyday workflows, helping users find trusted information anytime, anywhere, in the most natural way possible.